'Penrith', 'Perth', 'PerthAirport', 'Portland', 'Richmond', 'Sale', 'Nhil', 'NorahHead', 'NorfolkIsland', 'Nuriootpa', 'PearceRAAF', 'Mildura', 'Moree', 'MountGambier', 'MountGinini', 'Newcastle', 'Katherine', 'Launceston', 'Melbourne', 'MelbourneAirport', 'CoffsHarbour', 'Dartmoor', 'Darwin', 'GoldCoast', 'Hobart', 'Ballarat', 'Bendigo', 'Brisbane', 'Cairns', 'Canberra', 'Cobar', [array(['Adelaide', 'Albany', 'Albury', 'AliceSprings', 'BadgerysCreek', This can be done using the train_test_split utility from scikit-learn. When rows in the dataset have no inherent order, it's common practice to pick random subsets of rows for creating test and validation sets. If a separate test set is already provided, you can use a 75%-25% training-validation split. The test set should reflect the kind of data the model will encounter in the real-world, as closely as feasible.Īs a general rule of thumb you can use around 60% of the data for the training set, 20% for the validation set and 20% for the test set. For many datasets, test sets are provided separately. Test set - used to compare different models or approaches and report the model's final accuracy. Picking a good validation set is essential for training models that generalize well. Validation set - used to evaluate the model during training, tune model hyperparameters (optimization technique, regularization etc.), and pick the best version of the model. Training set - used to train the model, i.e., compute the loss and adjust the model's weights using an optimization technique. 
While building real-world machine learning models, it is quite common to split the dataset into three parts: Successfully installed scikit-learn-0.24.2 threadpoolctl-2.1.0 Successfully uninstalled scikit-learn-0.22.2.post1 Installing collected packages: threadpoolctl, scikit-learnįound existing installation: scikit-learn 0.22.2.post1 Requirement already satisfied, skipping upgrade: joblib>=0.11 in /usr/local/lib/python3.7/dist-packages (from scikit-learn) (1.0.1) Requirement already satisfied, skipping upgrade: scipy>=0.19.1 in /usr/local/lib/python3.7/dist-packages (from scikit-learn) (1.4.1) Requirement already satisfied, skipping upgrade: numpy>=1.13.3 in /usr/local/lib/python3.7/dist-packages (from scikit-learn) (1.19.5)

Logisticregression python jupyter notebook install#

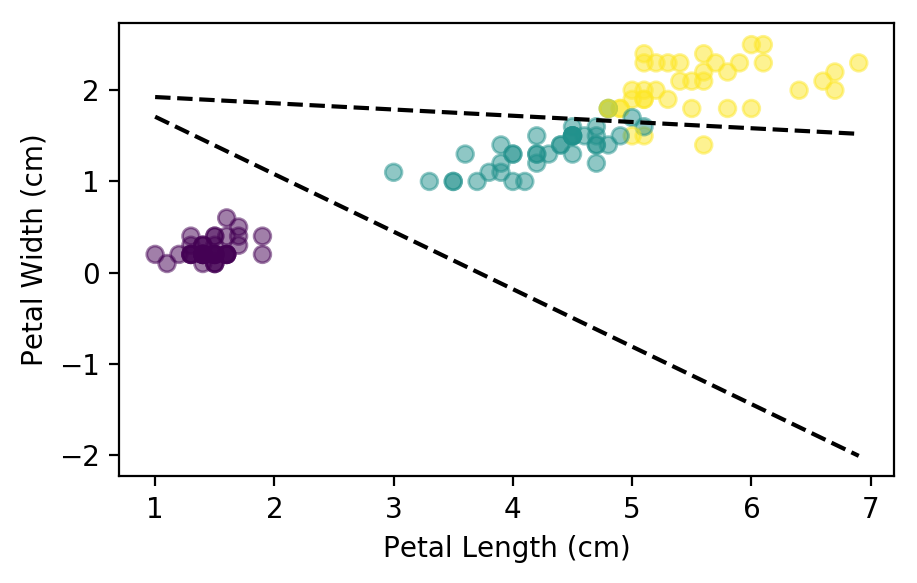

Let's install the scikit-learn library which we'll use to train our model. In this tutorial, we'll train a logistic regression model using the Rain in Australia dataset to predict whether or not it will rain at a location tomorrow, using today's data. Machine learning applied to unlabeled data is known as unsupervised learning ( image source). We repeat steps 1 to 4 till the predictions from the model are good enough.Ĭlassification and regression are both supervised machine learning problems, because they use labeled data.
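As a rough illustration of the 60%-20%-20% rule of thumb for training, validation and test sets, here is a minimal sketch that calls scikit-learn's train_test_split twice on randomly ordered rows. The arrays `X` and `y` are hypothetical stand-in data, not the Rain in Australia dataset, and the `random_state` value is an arbitrary choice made only so the split is reproducible.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: 1,000 rows, 3 features, binary target.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 3))
y = rng.integers(0, 2, size=1000)

# Step 1: hold out 20% of all rows as the test set.
X_train_val, X_test, y_train_val, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Step 2: split the remaining 80% into 75% training and 25% validation.
# 0.25 * 0.8 = 0.2, so validation is 20% of the full dataset.
X_train, X_val, y_train, y_val = train_test_split(
    X_train_val, y_train_val, test_size=0.25, random_state=42
)

print(len(X_train), len(X_val), len(X_test))
```

The second call is also the 75%-25% training-validation split that applies on its own when a separate test set is already provided. Random splitting like this assumes the rows have no inherent order.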
